Datasets

The datasets here have been assembled and made publicly available over the course of my research career. Most of my publications now also have data available via the Open Science Framework; please check my publications list for direct links for each manuscript. Please cite the corresponding publication when you use any of these datasets.

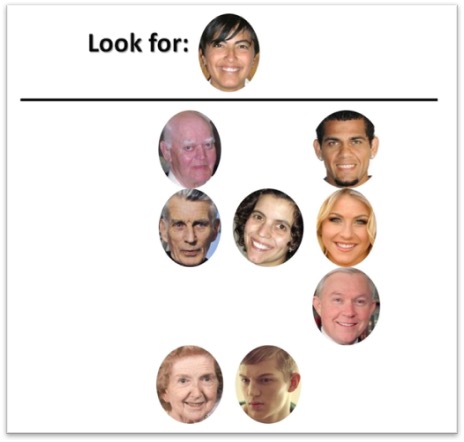

10k US Adult Faces Database. A collection of memorability scores, attribute rankings, and demographic information based on an experiment run with 10,168 natural face photographs that follow the demographics of the 1990 US Census. It also includes a program for navigating the 10,000+ image dataset to create custom image sets based on memorability or several other attributes. Citation: Bainbridge, W.A., Isola, P., & Oliva, A. (2013). The Intrinsic Memorability of Face Photographs. Journal of Experimental Psychology: General, 142(4), 1323-1334.

Repository for Big Data in the Psychological Sciences. A repository of Big Data collected by undergraduate students in the University of Chicago class Big Data in the Psychological Sciences. Topics span a range of questions, including social psychology, decision making, reactions to the COVID-19 pandemic, the replication crisis, and representation in psychology. Citation: Bainbridge, W.A., & Schertz, K.E. (2021). Big Data in the Psychological Sciences @ UChicago. Retrieved from osf.io/hz843.

Memory Drawing Data and Scoring Experiments. A collection of 2,600+ drawings of scenes done from memory, perception, and category schemas, as well as Amazon Mechanical Turk scripts for scoring these drawings. Citation: Bainbridge, W.A., Hall, E.H., & Baker, C.I. (2020). Highly diagnostic and detailed content of visual memory revealed during free recall of real-world scenes. Nature Communications, 10, 5.

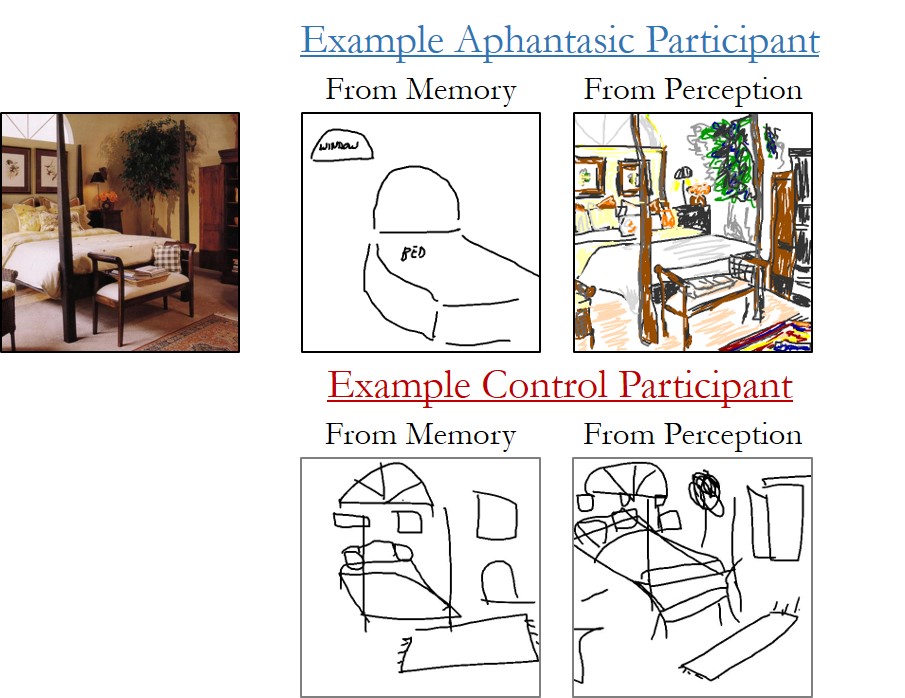

Aphantasic drawing database. A dataset of memory drawings, perception drawings, drawing movement data, and broad demographics for 61 aphantasic and 52 typical imagery participants. Citation: Bainbridge, W.A., Pounder, Z., Eardley, A.F., & Baker, C.I. (2021). Quantifying Aphantasia through drawing: Those without visual imagery show deficits in object but not spatial memory. Cortex, 135, 159-172.

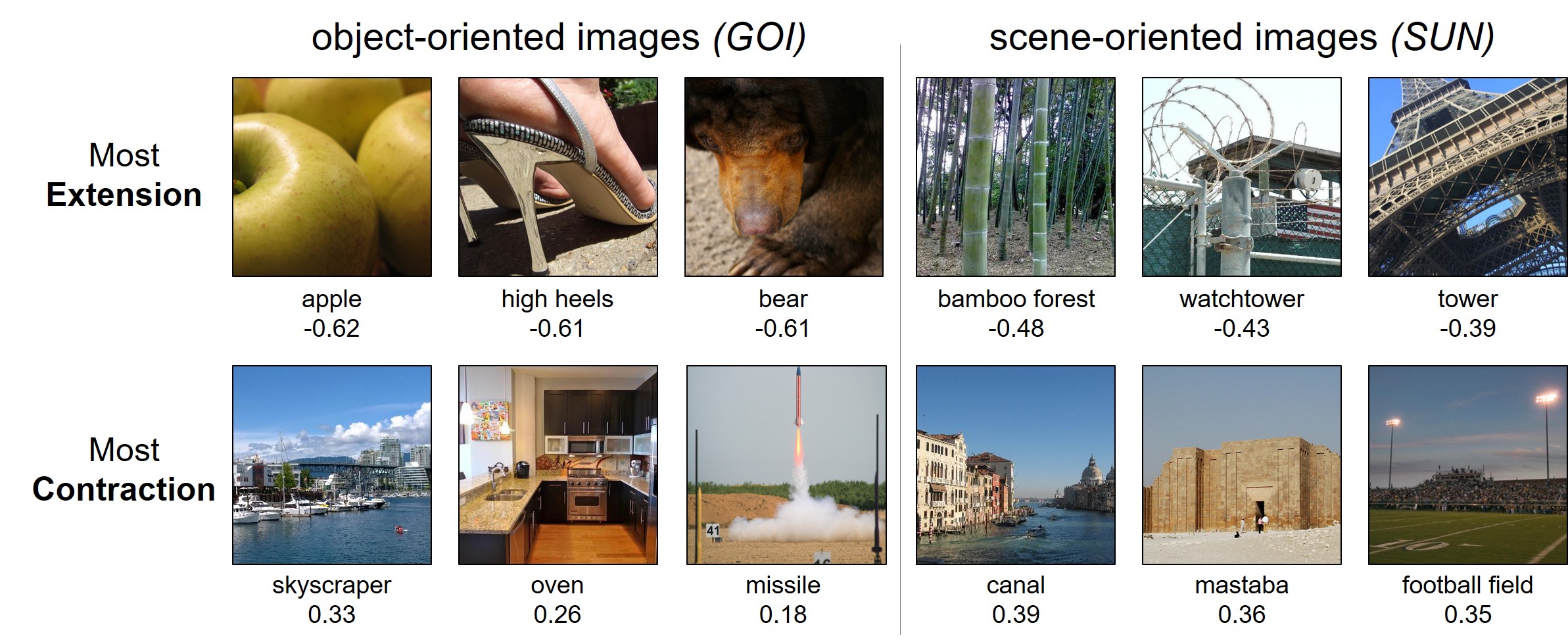

Large-scale boundary transformation database. A database of boundary transformation scores across 1,000 images and 2,000 participants, with quantified image properties. Citation: Bainbridge, W.A., & Baker, C.I. (2020). Boundaries extend and contract in scene memory depending on image properties. Current Biology, 30.

Stimuli & Memory Drawings during disrupted object-scene semantics. A collection of stimuli that manipulate object-scene semantics and corresponding drawings from memory. Citation: Bainbridge, W.A., Kwok, W.Y., & Baker, C.I. (2021). Disrupted object-scene semantics boost scene recall but diminish object recall in drawings from memory. Memory & Cognition.

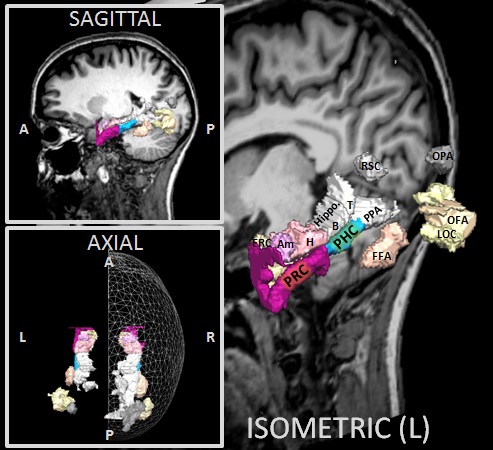

MTL & VVS ROI Collection. Anatomically defined medial temporal lobe regions (N=40) and functionally defined ventral visual stream regions (N=70). Citation: Bainbridge, W.A., Dilks, D.D., & Oliva, A. (2017). Memorability: A stimulus-driven perceptual neural signature distinctive from memory. NeuroImage, 149, 141-152.

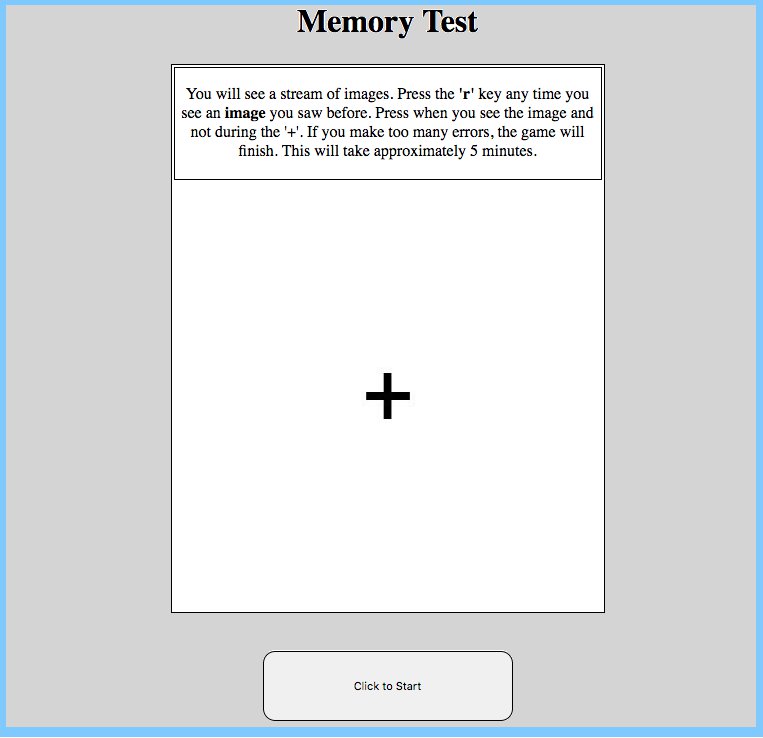

Memorability Experiment Maker. A tool that makes web-based memorability experiments for your stimulus set. Citation: Bainbridge, W.A. (2016). The memorability of people: Intrinsic memorability across transformations of a person's face. Journal of Experimental Psychology: Learning, Memory, and Cognition. Advance online publication.

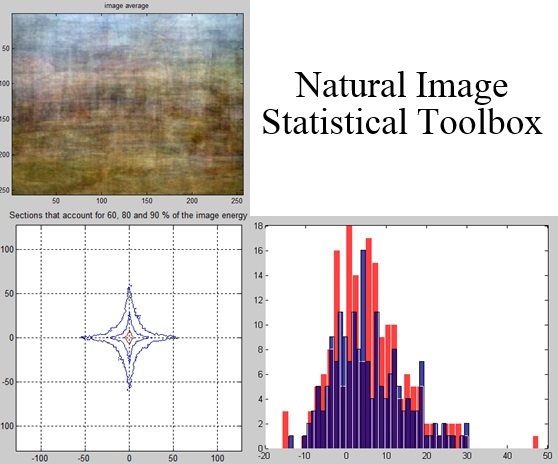

Natural Image Statistical Toolbox for MATLAB. A set of MATLAB scripts for measuring and comparing stimulus sets, to ensure they are controlled for various low-level visual properties, such as spatial frequency, color, and symmetry. Citation: Bainbridge, W.A., & Oliva, A. (2015). A toolbox and sample object perception data for equalization of natural images. Data in Brief, 5, 846-851.

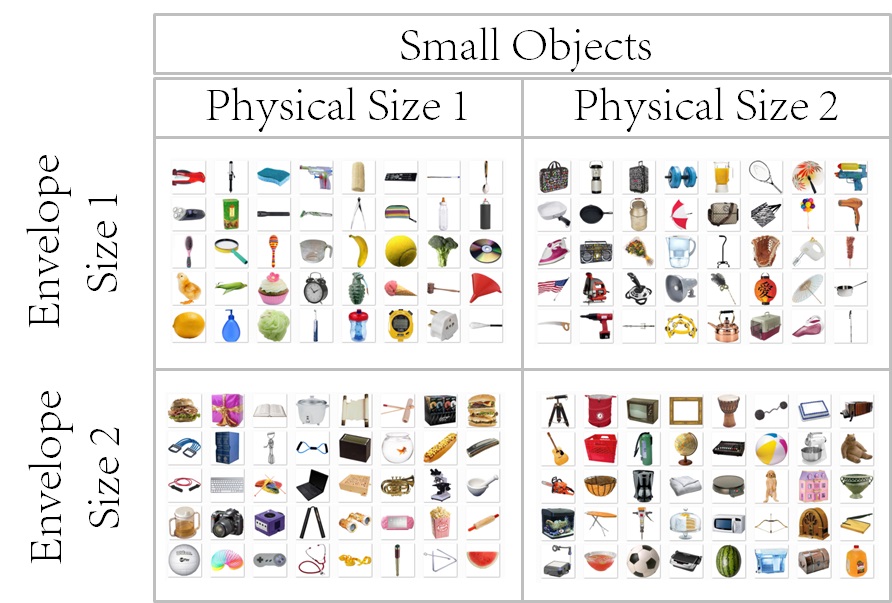

Object Interaction Envelope Stimuli. A set of stimuli for interaction envelope experiments, controlled for physical size and several other object properties. Citation: Bainbridge, W.A., & Oliva, A. (2015). Interaction envelope: Local spatial representations of objects at all scales in scene-selective regions. NeuroImage, 122, 408-416; and Bainbridge, W.A., & Oliva, A. (2015). A toolbox and sample object perception data for equalization of natural images. Data in Brief, 5, 846-851.

PsyToolkit Psychophysics Scripts. A collection of scripts for classical psychophysical studies written for PsyToolkit. Citation: Bainbridge, W.A. (2017). The resiliency of memorability: A predictor of memory separate from attention and priming. arXiv.

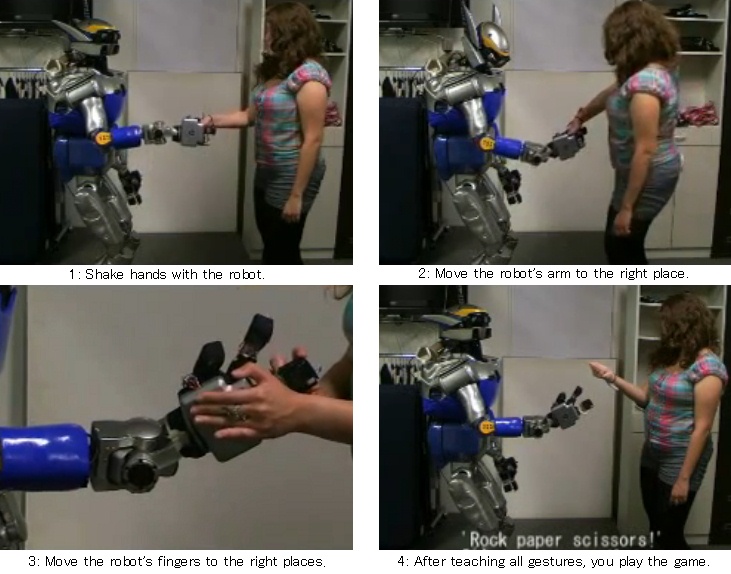

HRI Sensor Data. A collection of sensor data from three different human-robot interaction studies, with multiple users, sensors, and behavioral and survey-based measures. Citation: Bainbridge, W.A., Nozawa, S., Ueda, R., Okada, K., & Inaba, M. (2012). A methodological outline and utility assessment of sensor-based biosignal measurement in human-robot interaction. International Journal of Social Robotics, 4, 303-316.